From paper to patient, Pacmed’s AI newsletter #2: on interpretability

By Giovanni Cinà and Michele Tonutti (Data Scientists at Pacmed)

As AI agents grow in skills and complexity, the interaction between humans and machines becomes more important than ever. The true added value seems to lie not in Artificial Intelligence alone, but in the synergy between human and technology. Understanding how the technology operates is key to enabling this synergy. How can we understand and trust the decisions or predictions of an artificial intelligence? This question is even more pressing in the medical domain, where AI can directly influence the health of many.

In this issue of our newsletter we focus on explainable AI. This challenge holds a special place in our Pacmed hearts. Observational data, and algorithms based on these data, may not reflect the whole situation of the patient, so we think that synergy between doctor and computer is needed for responsible deployment and clinical value. That is why we think our algorithms should always be explainable to the degree that we can guarantee safe use. We have curated a small selection of papers on this topic; this is by no means a comprehensive list, but rather a good place to start if you are new to the issue, or a check to see whether anything important escaped your attention.

#1: Addressing the issues of interpretability from a general standpoint

Interpretable machine learning: definitions, methods, and applications

By: James Murdoch, Chandan Singh, Karl Kumbier, Reza Abbasi-Asl et al.

https://arxiv.org/pdf/1901.04592.pdf

Interpreting Machine Learning models is a multi-faceted problem that touches many steps of a project, from feature engineering to deployment. Framing the issue, breaking down its complexity, cataloguing the different techniques and discussing examples: these steps are often overlooked in favor of assessing the latest and coolest models and methods.

The first paper we propose takes a step back and puts interpretability in a broader context. It is therefore a good primer for those who are not already acquainted with the topic.

Why it matters. Among other aspects, the authors stress the importance of relevancy, namely the selection of an interpretation method that is apt for the intended audience, thus putting human-machine interaction at the center. This approach resonates with us at Pacmed, since we strive to build models that are accessible specifically to medical personnel.

Furthermore, the article offers an overview of the different approaches and discusses a number of relevant examples in which techniques are shown to work and, sometimes, not to work.

#2: SHAP: a method for interpretable models inspired by game theory

A unified approach to interpreting model predictions

By: Scott Lundberg and Su-In Lee

http://papers.nips.cc/paper/7062-a-unified-approach-to-interpreting-model-predictions.pdf

One of the issues with interpretability methods is the large number of available approaches and the lack of a clear view on which one is most suitable for which task. The 2017 paper by Lundberg and Lee, presented at NeurIPS, proposes SHAP, a unified approach based on additive feature attribution, meaning that the output of a model is approximated by the sum of the effects of the individual features. The proposed solution builds on game theory (Shapley values) and local approximation, and the authors show it to be the only solution of this kind that displays three desirable properties for explanation models: local accuracy, missingness, and consistency. The framework unifies six existing methods, including LIME and three approaches from game theory.

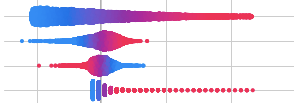
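To make the additive idea concrete, here is a minimal sketch that computes exact Shapley values for a tiny model by brute force and checks the local accuracy property. The toy `model`, the input `x` and the all-zero `baseline` are hypothetical, and "a missing feature takes its baseline value" is a simplifying assumption made for illustration; it is not the estimation procedure used in the paper or in the SHAP library.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical toy model with an interaction term (not from the paper).
    return 2.0 * x[0] + 1.0 * x[1] + 0.5 * x[0] * x[2]


def value(subset, x, baseline):
    # Features outside `subset` are replaced by their baseline value.
    # This "missing = baseline" convention is an assumption of this sketch.
    masked = [x[i] if i in subset else baseline[i] for i in range(len(x))]
    return model(masked)


def shapley_values(x, baseline):
    n = len(x)
    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        # Weighted average of the marginal contribution of feature i
        # over every subset of the remaining features.
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = value(set(subset) | {i}, x, baseline)
                without_i = value(set(subset), x, baseline)
                phi += weight * (with_i - without_i)
        phis.append(phi)
    return phis


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(x, baseline)
base_value = model(baseline)

# Local accuracy: base value plus attributions reproduces the prediction.
print(phis, base_value + sum(phis), model(x))
```

In this toy case the interaction between the first and third feature is split equally between them, which is the kind of fair allocation Shapley values are designed to give. The brute-force loop grows exponentially with the number of features, so it is only meant to illustrate the definition.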

Why it matters. SHAP offers a new way to explain both global feature importance (i.e. which features contribute to the predictions of the trained model) and single observations (i.e. which characteristics of a specific sample contribute to the prediction for that sample); it works for virtually any type of model, including deep neural networks. Aside from the mathematical validity and innovativeness of the approach, the main advantage of SHAP from the point of view of the end-user is that it has been shown to be rather consistent with human intuition, and it is relatively easy to interpret. In particular, the additivity of the explanation model enables the importance of each feature to be understood intuitively in relation to the output of the model. From a computational perspective, it is also a fairly efficient method, especially for tree-based algorithms, as explained in more detail in a second paper by the same authors on the subject.
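The difference between a local and a global explanation can be sketched with a linear model, for which the SHAP paper gives a closed form under a feature independence assumption: the attribution of a feature is its weight times the deviation of its value from the dataset mean. The weights, bias and data below are hypothetical, and taking the mean absolute attribution as the global importance is one common convention rather than the only possible one.

```python
# Hypothetical linear model and dataset, for illustration only.
weights = [0.8, -0.5, 0.1]
bias = 0.2
data = [
    [1.0, 2.0, 3.0],
    [3.0, 0.0, 1.0],
    [2.0, 4.0, 5.0],
]


def predict(x):
    return bias + sum(w * v for w, v in zip(weights, x))


n_features = len(weights)
means = [sum(row[i] for row in data) / len(data) for i in range(n_features)]
# For a linear model, the prediction at the mean input equals the mean prediction.
expected_output = predict(means)


def linear_shap(x):
    # Closed form for linear models, assuming independent features.
    return [w * (v - m) for w, v, m in zip(weights, x, means)]


# Local explanation: attributions for one specific sample.
local_explanation = linear_shap(data[0])

# Global importance: mean absolute attribution of each feature over the dataset.
global_importance = [
    sum(abs(linear_shap(row)[i]) for row in data) / len(data)
    for i in range(n_features)
]

print(local_explanation)
print(global_importance)
```

Note that the second feature ends up with the highest global importance even though it contributes nothing to the first sample: the two views answer different questions.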

#3: Explaining the predictions of a model to prevent hypoxaemia during surgery using SHAP

Explainable machine learning predictions for the prevention of hypoxaemia during surgery

By: Scott Lundberg, Bala Nair, Monica S. Vavilala et al.

https://www.nature.com/articles/s41551-018-0304-0

Because of its enhanced interpretability and consistency, SHAP is particularly suitable for clinical applications. This paper by a research group from the University of Washington employs SHAP to provide an intuitive and useful visual interpretation of patient-specific feature importance. The team, which includes two of the authors of the original SHAP paper, developed a machine learning model to predict hypoxaemia during surgery, enabling the surgical team to take action if needed. For such a prediction to lead to actionable insight, it is fundamental that the clinical staff understand exactly which factors are responsible for the onset of hypoxaemia, so that they can not only trust the output of the model, but also know what can be done to curb the risk.

Why it matters. This paper presents a successful practical implementation of a theoretically sound explainability algorithm applied to a medical decision-support tool.

The feature importance values provided by SHAP have been shown to align with medical expectations of what factors are known to be responsible for hypoxaemia during surgery, which shows the applicability of the tool to real-life problems. The additive contribution of the features also has the potential to highlight complex relationships between clinical parameters which may not be immediately clear during surgical operations. Lastly, the paper demonstrates the benefit of clear and appealing visualizations to explain a model’s predictions. Indeed, SHAP’s open source library allows captivating and interpretable graphs to be produced with just a few lines of code, going the extra mile to help doctors understand what goes on inside the complex machine learning algorithms.

#4: Eye disease detection: an original approach on explainable neural networks

Clinically applicable deep learning for diagnosis and referral in retinal disease

By: Jeffrey de Fauw, Joseph Ledsam, Olaf Ronneberger

https://www.nature.com/articles/s41591-018-0107-6

This article by DeepMind researchers does not focus on interpretability per se, as it presents a model to classify 3D eye scans. Optical coherence tomography (OCT) scans are widely used in diagnosing eye conditions, yet they take medical staff considerable time to analyze, making them a perfect target for automated techniques. The model described in the paper provides diagnoses in a swift and accurate manner, promising to be of great aid for early detection and effective treatment. In addition to the high performance of the model, the paper reports enhanced interpretability compared to similar methods.

Why it matters. Instead of using additional techniques to approximate the behaviour of their neural network, the researchers decided to split the model into two parts, one that segments the 3D scans and one that performs the classification itself. The output of the segmentation model provides insights into the kind of features used by the classification model, but it is also a clinically valuable tool that medical personnel can use to interpret scans. This goes beyond explainability, actually providing information that can be used independently of the model’s prediction. Moreover, and this is the second selling point of the article in our opinion, the authors put extra effort into integrating the models into the clinical pathway: the output of the segmentation model is displayed with a clinical result viewer for easy understanding, while the segmentation model is trained and tested on different scanning machines to ensure its generalizability across devices. Worth your time.
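The two-stage design can be sketched in a few lines. Everything below is a hypothetical stand-in: the paper uses deep networks on 3D scans, while this toy uses intensity thresholds on a 2D grid, and the tissue labels, thresholds and referral rule are invented for illustration. The point is only the architecture: the classifier sees nothing but the segmentation output, so that intermediate map can be inspected on its own.

```python
from collections import Counter


def segment(scan):
    # Stage 1 (hypothetical stand-in for the segmentation network):
    # label every pixel intensity with a tissue type.
    def label(value):
        if value < 0.3:
            return "background"
        if value < 0.7:
            return "retina"
        return "fluid"

    return [[label(value) for value in row] for row in scan]


def classify(tissue_map):
    # Stage 2 (hypothetical stand-in for the classification network):
    # decide using only the tissue map, never the raw scan.
    counts = Counter(label for row in tissue_map for label in row)
    fluid_fraction = counts["fluid"] / sum(counts.values())
    decision = "refer" if fluid_fraction > 0.2 else "routine"
    return decision, fluid_fraction


scan = [
    [0.1, 0.5, 0.9],
    [0.2, 0.8, 0.6],
]
tissue_map = segment(scan)  # inspectable intermediate output
decision, fluid_fraction = classify(tissue_map)

print(tissue_map)
print(decision, round(fluid_fraction, 2))
```

Because the second stage depends only on the tissue map, a clinician who disagrees with the decision can check whether the segmentation itself looks wrong.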

#5: Optimizing neural networks for explainability via a decision tree

Beyond Sparsity: Tree regularization of deep models for interpretability

By: Mike Wu, Michael Hughes, Sonali Parbhoo et al.

https://arxiv.org/abs/1711.06178

Decision trees are often regarded as highly interpretable, since the user can take the sequence of splits in the tree as the rationale for why the model has returned a certain prediction for a specific sample. However, this sort of explanation can also become unwieldy if, put simply, the tree is too deep. The authors of the next paper introduce a technique to explain a high-performing black-box model via a simple decision tree that approximates the model itself.

Why it matters. The method adds a term to the loss function of the neural network that encodes the complexity of the tree associated with the model, i.e. the complexity of the model’s explanation. Training the model with this additional term also minimizes the complexity of the associated tree; in other words, the model optimizes its own explainability. Alas, the perk of enhanced explainability might cost some performance.
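As a rough sketch of the idea, the complexity of a tree can be measured by the average number of decision nodes a sample passes through, and that number can be added to the prediction loss with a weight. The trees, samples, loss values and `strength` below are all hypothetical, and the sketch leaves out how the paper makes this penalty usable during gradient-based training.

```python
def path_length(node, x):
    # Number of decision nodes visited before reaching a leaf.
    steps = 0
    while "leaf" not in node:
        steps += 1
        branch = "left" if x[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return steps


def average_path_length(tree, samples):
    return sum(path_length(tree, x) for x in samples) / len(samples)


def regularized_loss(prediction_loss, tree, samples, strength):
    # Prediction loss plus a penalty on the complexity of the explanation.
    return prediction_loss + strength * average_path_length(tree, samples)


# Hypothetical trees approximating two candidate models.
shallow_tree = {"feature": 0, "threshold": 0.5,
                "left": {"leaf": 0}, "right": {"leaf": 1}}
deep_tree = {
    "feature": 0, "threshold": 0.5,
    "left": {"leaf": 0},
    "right": {
        "feature": 1, "threshold": 0.5,
        "left": {"leaf": 0},
        "right": {"feature": 2, "threshold": 0.5,
                  "left": {"leaf": 1}, "right": {"leaf": 0}},
    },
}
samples = [[0.2, 0.1, 0.9], [0.8, 0.3, 0.4], [0.9, 0.7, 0.2], [0.7, 0.9, 0.8]]

# The deeper tree fits slightly better but is harder to read.
loss_deep = regularized_loss(0.30, deep_tree, samples, strength=0.1)
loss_shallow = regularized_loss(0.35, shallow_tree, samples, strength=0.1)
print(loss_deep, loss_shallow)
```

With these made-up numbers the shallow tree wins despite its higher prediction loss, which mirrors the trade-off between explainability and performance mentioned above.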

The article reports experiments on medical data with interesting findings. We applied this technique to the Intensive Care (IC) data we used for our own models (see this for more details on our IC work) and found that the resulting explanation was indeed neater: see our blog post for more on this technique and our results.

Conclusion

Explainable AI is quickly rising to prominence as a field, yet it is still a young research area. Some important techniques are still being tested and discussed; see for example the debate on local explanation methods. For our development as AI practitioners it is crucial to keep in touch with this area of research. However, that is not enough: we should also engage in a meaningful conversation with the general public. Clients, users, regulators, citizens: all these actors will be affected by AI one way or the other, and they should have a say when it comes to deciding what kind of AI we want.

References

[1] James Murdoch, Chandan Singh, Karl Kumbier, Reza Abbasi-Asl et al. Interpretable machine learning: definitions, methods, and applications. arXiv:1901.04592v1 [stat.ML]. 2019 Jan 14.

[2] Scott Lundberg and Su-In Lee. A unified approach to interpreting model predictions. Electronic Proceedings of the 31st Neural Information Processing Systems Conference (NIPS 2017).

[3] Scott Lundberg, Bala Nair, Monica S. Vavilala et al. Explainable machine learning predictions for the prevention of hypoxaemia during surgery. Nature Biomedical Engineering, 2 (10), 749–760. 2018.

[4] Jeffrey de Fauw, Joseph Ledsam, Olaf Ronneberger. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nature Medicine 24, 1342–1350. 2018 Aug.

[5] Mike Wu, Michael Hughes, Sonali Parbhoo et al. Beyond Sparsity: Tree regularization of deep models for interpretability. arXiv:1711.06178 [stat.ML]. 2017 Nov.